Storage on Kubernetes

Kubernetes is fantastic for stateless apps. Deploy your app, scale it up to hundreds of instances, be happy. But how do you manage storage? How do we ensure every one of our hundreds of apps gets a piece of reliable, fast, cheap storage?

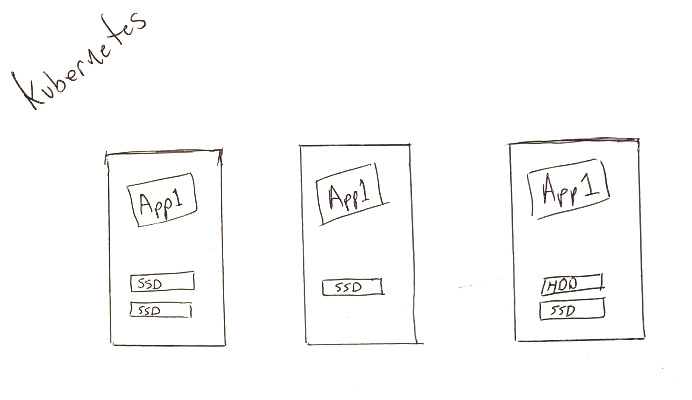

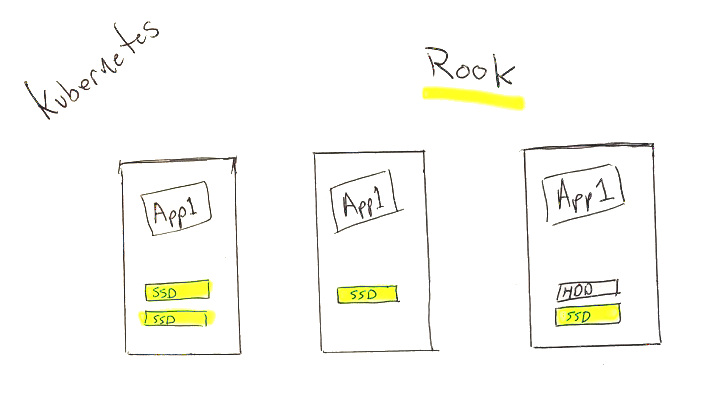

Let's take a typical Kubernetes cluster, with a handful of nodes (Linux servers) powering a few replicas of your app:

Notice our sad, unused disk drives! Kubernetes sure brings a lot of wins, but are we even sysadmins anymore if we don't manage enormous RAID arrays?

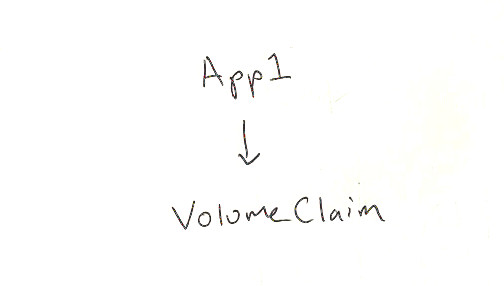

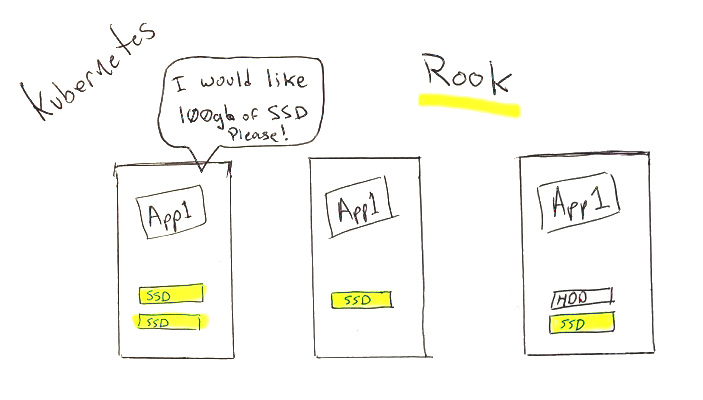

Persistent Volume Claims

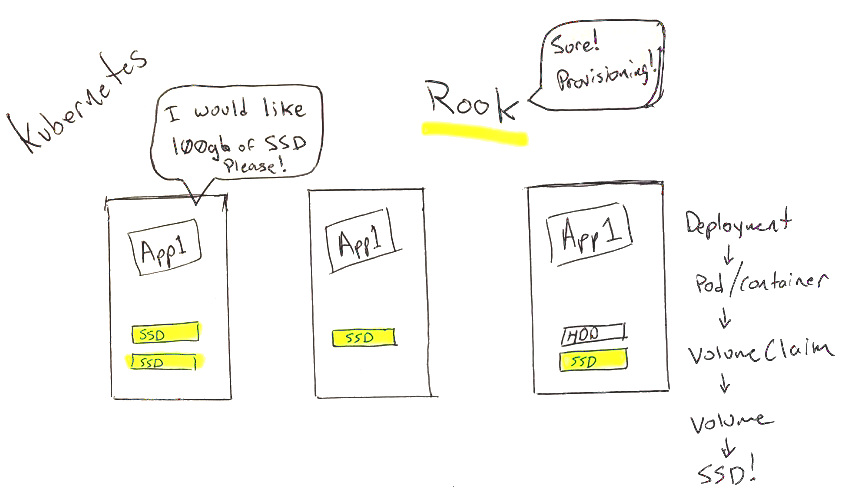

In Kubernetes, we define PersistentVolumeClaims to ask our system for storage. To put it simply, an App "claims" a bit of storage, and the system responds in a configurable way:

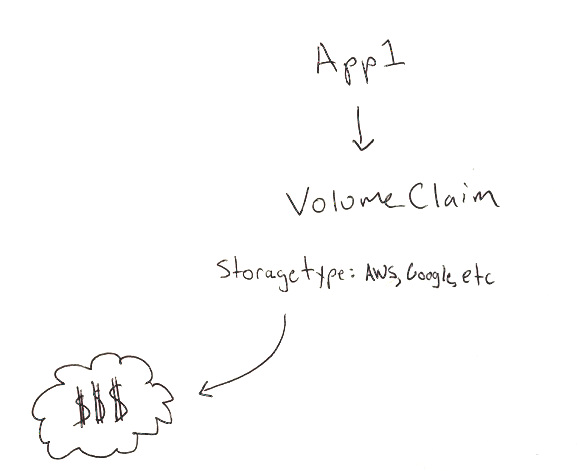

Unfortunately, most cloud providers are eager to harness the simplicity of Kubernetes by "replying" to your storage request with their own cloud storage (e.g., Amazon's EBS).

There are a number of downsides to this strategy for consumers:

- Performance & Cost: The performance of EBS volumes (and other cloud providers' equivalents) depends on their size, meaning a smaller disk is also a slower disk.

- Characteristics: Cloud providers typically offer HDD, SSD, and a "provisioned IO" option. This limits sysadmins in their storage system designs: where is the tape backup? What about NVMe? How is the disk attached to the server my code is running on?

- Portability / Lock-in: EBS is EBS and Google Persistent Disks are Google Persistent Disks. Cloud vendors are aggressively trying to lock you into their platforms - and typically hide the filesystem tools we know and love behind a Cloud-specific snapshotting system.

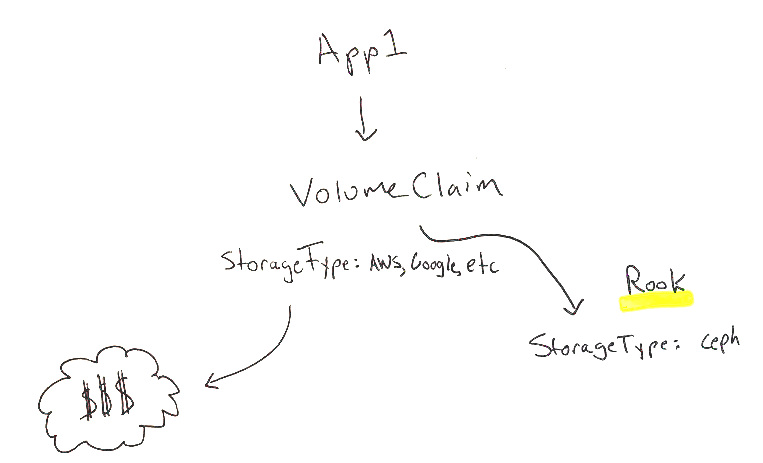

So... what we need is an open-source storage orchestration system that runs on any Kubernetes cluster and can transform piles of drives into pools of storage available to our Pods.

Enter Rook.io

What is Rook? From the website, Rook is "an Open-Source, Cloud-Native Storage for Kubernetes" with "Production ready File, Block and Object Storage". Marketing speak aside, Rook is an open-source version of AWS EBS and S3, which you can install on your own clusters. It's also the backend for KubeSail's storage system, and it's how we carve up massive RAID arrays to power our users' apps!

Rook and Ceph

Rook is a system which sits in your cluster and responds to requests for storage, but it is not itself a storage system. Although this makes things a bit more complex, it should make you feel good: while Rook is quite new, the storage systems it uses under the hood are battle-hardened and far from beta.

We'll be using Ceph, which is about 15 years old and is used and developed by companies like Canonical, CERN, Cisco, Fujitsu, Intel, Red Hat, and SanDisk. It's very far from Kubernetes-hipster, despite how cool the Rook project looks!

With Rook at version 1.0, and Ceph powering some of the world's most important datasets, I'd say it's about time we get confident and take back control of our data storage! I'll be building RAID arrays in the 2020s and no one can stop me! Muahaha

Install

I won't focus on the initial install here, since the Rook guide is quite nice. If you want to learn more about the Rook project, I recommend this KubeCon video as well. Once you've got Rook installed, we'll create a few components:

- A CephCluster, which maps nodes and their disks to our new storage system.

- A CephBlockPool, which defines how to store data, including how many replicas we want.

- A StorageClass, which defines a way of using storage from the CephBlockPool.

Let's start with the CephCluster:
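Here is a minimal sketch of such a CephCluster. It assumes Rook 1.0's `ceph.rook.io/v1` API, the `rook-ceph` namespace and cluster name used in the health check later in this post, and two hypothetical storage workers named `storage-node-1` and `storage-node-2` that each have `/opt/rook` mounted. The Ceph image tag is also an assumption, and the `storage` section has changed between Rook releases, so compare it with the example manifests for the version you installed.

```yaml
# Sketch of a CephCluster for Rook 1.0 (ceph.rook.io/v1).
# Node names and the Ceph image tag are assumptions; adjust for your cluster.
apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    # Assumed Ceph Nautilus image; use a version your Rook release supports.
    image: ceph/ceph:v14.2.1-20190430
  # Host path where Rook keeps its own configuration and state.
  dataDirHostPath: /var/lib/rook
  mon:
    # Three monitors, so a majority (quorum) survives losing one.
    count: 3
    # Never schedule two monitors on the same node.
    allowMultiplePerNode: false
  storage:
    # Only use the nodes listed below, not every node in the cluster.
    useAllNodes: false
    useAllDevices: false
    nodes:
      # Hypothetical "storage workers", each with /opt/rook mounted.
      - name: storage-node-1
        directories:
          - path: /opt/rook
      - name: storage-node-2
        directories:
          - path: /opt/rook
```

Each `name` under `nodes` has to match that node's `kubernetes.io/hostname` label, which is what makes this list an explicit allow-list of storage workers.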

The most important thing to note here is that a CephCluster is fundamentally a map of which Nodes, and which drives or directories on those Nodes, will be used to store data. Many tutorials will suggest `useAllNodes: true`, which we strongly recommend against. Instead, we recommend managing a smaller pool of dedicated "storage workers". This lets you add different kinds of machines later (say, ones with very slow drives) without accidentally or unknowingly adding them to the storage pool. We'll be assuming `/opt/rook` is a mount point, but Rook is capable of using unformatted disks as well as directories.

One other note: a `mon` is a Ceph monitor, which keeps the authoritative map of the cluster's state. Monitors work by majority vote, so we strongly suggest running at least three and keeping `allowMultiplePerNode` set to false, so that losing a single node can't take out your quorum.

Now our cluster looks like this:

By the way, you'll want to take a look at the pods running in the `rook-ceph` namespace! You'll find your OSD (Object Storage Daemon) pods there, as well as the monitor and agent pods.

Let's create our CephBlockPool and a StorageClass that uses it. We'll set the pool's replicated size to 2, meaning we'll keep 2 copies of all our data.
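A minimal sketch of that pool and StorageClass follows. It assumes Rook 1.0's FlexVolume-based provisioner (`ceph.rook.io/block`); releases that use the Ceph CSI driver need a different `provisioner` and extra parameters. The pool name `replicapool` is an assumption, while `my-storage` and the `Delete` reclaim policy match the `kubectl get pv` output later in this post.

```yaml
# Sketch: a replicated block pool plus a StorageClass that provisions from it.
apiVersion: ceph.rook.io/v1
kind: CephBlockPool
metadata:
  name: replicapool          # assumed pool name
  namespace: rook-ceph
spec:
  # Put each replica on a different host.
  failureDomain: host
  replicated:
    # Keep 2 copies of every object.
    size: 2
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: my-storage
# Rook 1.0 FlexVolume provisioner (assumption: not the CSI driver).
provisioner: ceph.rook.io/block
parameters:
  # Which CephBlockPool backs volumes of this class.
  blockPool: replicapool
  # Namespace the CephCluster runs in.
  clusterNamespace: rook-ceph
  fstype: xfs
# Delete the backing Ceph image when the claim is deleted.
reclaimPolicy: Delete
```

With `failureDomain: host` and `size: 2`, Ceph needs at least two storage hosts to place both copies, which is one more reason to keep the list of storage workers explicit.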

We'll wait for our CephCluster to settle. You'll want to check the health reported on the CephCluster object you created:

```
➜ kubectl -n rook-ceph get cephcluster rook-ceph -o json | jq .status.ceph.health
"HEALTH_OK"
```

Now, we're ready to make a request for storage!

We can now request storage in any of the standard ways. From this point on, there's no Rook-specific code and no Rook-specific assumptions.
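For example, an ordinary PersistentVolumeClaim asking the `my-storage` class for a 1000Mi ReadWriteOnce volume could look like this. The claim name, namespace, and size are taken from the `kubectl get pv` output shown below; nothing in it mentions Rook.

```yaml
# A plain Kubernetes PVC; only storageClassName ties it to the Rook-backed class.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-data
  namespace: default
spec:
  storageClassName: my-storage
  accessModes:
    - ReadWriteOnce   # reported as RWO by `kubectl get pv`
  resources:
    requests:
      storage: 1000Mi
```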

Now Rook will see our PersistentVolumeClaim and create a PersistentVolume for us! Let's take a look:

```
➜ kubectl get pv --watch
NAME      CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM               STORAGECLASS   AGE
pvc-...   1000Mi     RWO            Delete           Bound    default/test-data   my-storage     13m
```
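To actually use the volume, a Pod refers to the claim by name. Here is a minimal sketch; the Pod name, the `busybox` image, the command, and the `/data` mount path are all illustrative assumptions.

```yaml
# Hypothetical Pod that mounts the test-data claim at /data.
apiVersion: v1
kind: Pod
metadata:
  name: test-data-writer
  namespace: default
spec:
  containers:
    - name: writer
      image: busybox
      # Write a file onto the Ceph-backed volume, then idle.
      command: ["sh", "-c", "date > /data/hello.txt && sleep 3600"]
      volumeMounts:
        - name: data
          mountPath: /data
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: test-data
```

Because the claim is ReadWriteOnce, the volume can be mounted read-write by a single node at a time, which is the usual fit for a block device like this.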

So there we go! A working, fairly easy-to-use, Kubernetes-native storage system. We can bring our own disks, we can use any cloud provider... Freedom!

Conclusion: Should you use it?

Well, TL;DR: Yes.

But the real question you should ask is, am I trying to build the most awesome data-center-in-my-closet the world has seen since 2005? Am I trying to learn enterprise-ready DevOps skills? If the answer is yes, I strongly recommend playing with Rook.

At KubeSail we manage multiple Kubernetes clusters backed by very large and busy Ceph clusters, and so far we have been extremely happy with their stability and performance. While playing with piles of hard drives does make me nostalgic for the good ol' days, our platform allows you to have a rock-solid setup for your apps out of the box.

We strongly prefer Open Source tools that avoid vendor lock-in. You should be able to install and configure all of our tools at KubeSail on your own clusters, and we'll continue to write about our stack in a continuing series of blog posts. If you have any questions about the article, Rook, Kubernetes, or really just about anything else, send us a message on Twitter!

Stay in the loop!

Join our Discord server, give us a shout on Twitter, check out some of our GitHub repos and be sure to join our mailing list!